| Home Page | Overview | Site Map | Index | Appendix | Illustration | About | Contact | Update | FAQ |

|

The Monte Carlo Method was invented by Stanislaw Ulam (1909-1984). He was born in Poland and became a US citizen in 1941. During WWII, he was a team member of the Manhattan Project making nuclear bomb. In particular, he worked on the neutron diffusion problem in "gun-type" bomb. It leads him to further investigation of numerical computations for very complicated systems. With the newly availability of general-purpose electronic computer, he proposed a new way to estimate statistically the probability of a certain process. This is the original idea of Monte Carlo Method named in reference to his uncle's fondness for gambling in Casino Monte Carlo (Figure 01). |

Figure 01 Monte Carlo Casino [view large image] |

|

The points are inserted randomly into the graph, accuracy depends on large number of points. |

Figure 03 |

See "Table of Integrals". |

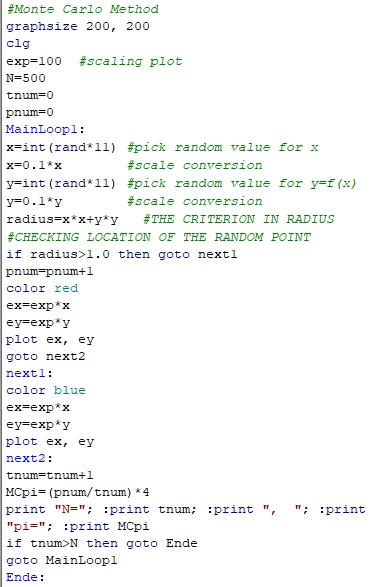

. Due to limitation of random number generation in the programming language Basic 256, it returns a value of

. Due to limitation of random number generation in the programming language Basic 256, it returns a value of  ~ 3.0 instead of 3.1416. Anyway, this is just a demonstration of principle (see the algorithm in Figure 05).

~ 3.0 instead of 3.1416. Anyway, this is just a demonstration of principle (see the algorithm in Figure 05). |

|

. An algorithm similar to the one shown in Figure 05 is still workable if the checking of the random data (whether below or above the data point) is performed against the array. . An algorithm similar to the one shown in Figure 05 is still workable if the checking of the random data (whether below or above the data point) is performed against the array. |

Figure 04 |

Figure 05 Algorithm |

|

The area under the irregular curve is A = (0.4x0.4) + (0.4x0.4)/2 = 0.24 . Visual counting obtains n = 35 points inside the area, the total number of points N = 5x9 = 45 giving the ratio r = n/N = 0.78. Since the total area is 0.4x0.8 = 0.32, A ~ 0.78x0.32 = 0.25 QED (See Figure 06). |

Figure 06 MC for Irregular Function [view large image] |

Indeed, the area under this shape can be computed with an array as suggested above producing the same value of A ~ 0.24 (See the corresponding algorithm). |

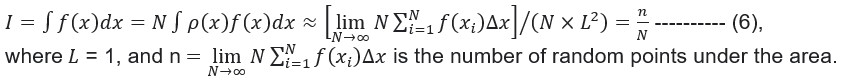

(xi) = 1/N with

(xi) = 1/N with  Then :

Then :

(x) = constant for this particular case. In general, it could be any function such as the "Binomial Distribution" shown by the red dotted area in Figure 08.

(x) = constant for this particular case. In general, it could be any function such as the "Binomial Distribution" shown by the red dotted area in Figure 08.

|

C(N,k)=N!/[k!(N-k)!] ---------- (7), where the factorial q! = q(q-1)(q-2)...1, and 0! = 1 (see red dots in Figure 08, also see a "table"). The Binomial probability is defined by : PBinomial = (a + b)N =  C(N,k)aN-kbk C(N,k)aN-kbk  1, ---------- (8) 1, ---------- (8)where a = p, b = (1 - p). |

Figure 07 Binomial Distribution |

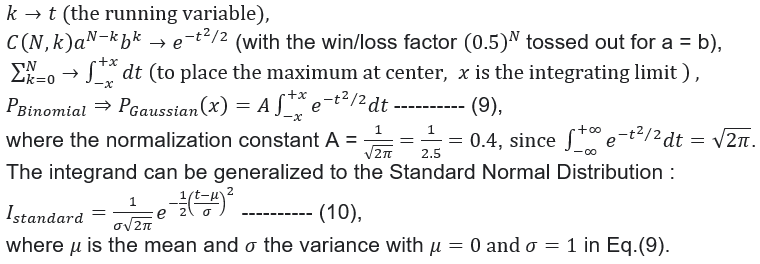

In case a = b, it is called Gaussian or Normal probability PGaussian which is symmetrical with respect to its maximum. For a  b, PBinomial reveals a skew from symmetry as shown in Figure 07 for p = 0.7. PBinomial goes-over to continuum as N b, PBinomial reveals a skew from symmetry as shown in Figure 07 for p = 0.7. PBinomial goes-over to continuum as N  with the following transitions : with the following transitions : |

|

See the "computer program" for detail. Essentially, the program scales the Binomial probability on an 1x1 square graph. Figure 08 shows the area under the probability curve in red. The counting ratio between the numbers under the curve and the whole square is : ratio = 0.4 similar to the area by numerical integration. This scaled value of total probability can be reverted to "1" with the multiplication of  . .

|

Figure 08 |

(x) mentioned earlier. For example :

(x) mentioned earlier. For example :

|

In general, the integrand can be very complicated and becomes more formidable by the addition of  (x). Fortunately, many standard distributions are available in the form of "subroutines" for public use (at a fee). For example, with f(x) = x2, it can be evaluated using 1/2 of the array for the Binomial distribution from the previous examples. Figure 09 shows the integrand obtained by the Monte Carlo method in few red dots (see "computer program" + scaling ). The solid curves are evaluated by conventional method for comparison. Integration can be performed by the Monte Carlo method as usual if each piece of the integrand is stored in another array. (x). Fortunately, many standard distributions are available in the form of "subroutines" for public use (at a fee). For example, with f(x) = x2, it can be evaluated using 1/2 of the array for the Binomial distribution from the previous examples. Figure 09 shows the integrand obtained by the Monte Carlo method in few red dots (see "computer program" + scaling ). The solid curves are evaluated by conventional method for comparison. Integration can be performed by the Monte Carlo method as usual if each piece of the integrand is stored in another array.

|

Figure 09 Find Integrand with Probability Density by Monte Carlo [view large image] |

Essentially, the program picks a random value of x by which f(x) is multiplied to  (x). The integrand is evaluated as f(x) (x). The integrand is evaluated as f(x) (x). A corresponding curve would emerge in a plot with increasing number of iterations, or by just running the x index from xi to xf (5 to 10 here). (x). A corresponding curve would emerge in a plot with increasing number of iterations, or by just running the x index from xi to xf (5 to 10 here). |

|

|

together with another normal distribution (un-correlated) into the process (see an example in a "computer program"). Figure 10 shows the result pictorially in a 3-D graph (no such convenience beyond the bivariate). The newly created normal distribution can be stored in another array for further processing, but the size of the array and computer time rapidly become un-manageable as the number of dimension increases. |

Figure 10 |

Figure 11 |

Assuming unlimited computing resources, there is no problem extending the procedure to multivariate functions (dimension > 2). As shown in Figure 11, the probability density distribution  (x1,x2,...) can be in various forms to generate f( (x1,x2,...) can be in various forms to generate f( ), then goes on to produce the integral of the whole thing. ), then goes on to produce the integral of the whole thing.

|

) is similar to f(x)

) is similar to f(x) (x) in previous notation,

(x) in previous notation,  (x) is the known density distribution, while f(x) is an arbitrary function.

(x) is the known density distribution, while f(x) is an arbitrary function.

|

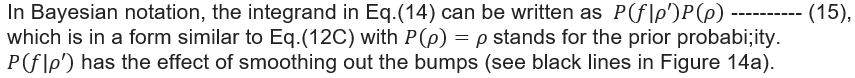

Bayesian Theorem - It introduces a new kind of probability P(H|D), which relates the probability of "event H" given that "event D" has taken place. As shown in Figure 12, P(H|D) = [P(D|H) x P(H)] / P(D) ---------- (11), where P(H) and P(D) are the probabilities of observing H and D independent of each other. |

Figure 12 Bayesian Probability |

Actually, it is somewhat mislabelled for the event H as "Hypotheses" (which causes so much distress for Calvin the comic character, see insert in Figure 12), and D as "Data". |

|

From available statistical data, probability of smoke without fire P(B) ~ 50%, i.e., rather common and fire without smoke P(A) ~ 1%, i.e., very rare. Giving that the probability of smoke with the apperence of fire is almost certain P(B|A) ~ 99%, P(A|B) = [P(B|A) x P(A)] / P(B) = (0.99 x 0.01) / 0.5 ~ 2%. |

Figure 13 Smoke and Fire [view large image] |

Lastly, the correlated probability (plus) [P(A|B) x P(B)] = [P(B|A) x P(A)] ~ 1%, with the appearance of either fire or smoke.

|

1 by definition (actually for any probability), in this special case of P(B|A) ~ 1, violation occurs if P(A) > P(B); then we would live in a weird world of more fire than smoke.

1 by definition (actually for any probability), in this special case of P(B|A) ~ 1, violation occurs if P(A) > P(B); then we would live in a weird world of more fire than smoke. |

|

Figure 14 |

|